Critical Requirements for Modern API Monitoring

Enterprises lose millions annually due to API outages and performance degradation. Modern observability strategies are crucial to mitigate these risks.

Today, almost every system is dependent on APIs. Data integration, authentication, payment processing, and many other functions rely on multiple reliable and performant APIs. Banks around the world, for example, have adopted the Open Banking API for payments, credit scoring, lending origination, fraud detection, and lots more.

APIs are everywhere—and critical to everything

APIs are the internal workers of the internet. Connecting to a single website, using a business application like an ATM, or a mobile app likely means dozens if not hundreds or even thousands of API calls. Each one has the possibility to impact the overall service: if it’s slow, the service might be slow. If it returns an error, the service may fail. Understanding the interaction between your services and the APIs they consume is critical to making your services resilient.

There are different ways to monitor APIs. The minimum that every system should use is proactively monitoring, measuring, and testing your critical APIs – both your own as well as third party APIs. API monitoring systems have been around for some time. From the basic ping to ensure an API is reachable to more advanced multi-step, scripted, proactive monitoring that looks at response time, functional validation, etc. More advanced API monitoring will incorporate Chaos Engineering methodologies, like blocking or simulating an error on particular APIs and observing the impact on the overall system.

API resilience isn’t optional—here’s why

In a world where most applications and systems that are interconnected via APIs are geographically distributed, touching different clouds and traversing multiple points across the internet, simple proactive monitoring is no longer enough. A traditional approach to API monitoring will not only be insufficient, but it may also miss many important incidents and would not be helpful in identifying root cause.

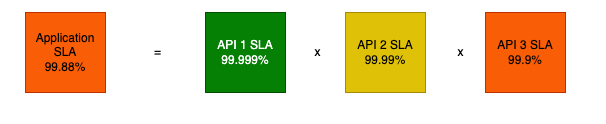

The goal is to have resilient APIs. The formula for resilience is

- Reachability: Can I get to the API from where I am? (Or in the case of APIs, where its consumers are).

- Availability: Is the API functional – does it do what it is supposed to do?

- Performance: Does the API respond within the expected time?

- Reliability: Can I trust the API will be working consistently?

Then, take this formula and combine it for every API used in your system!

System Resilience = minimum resilience across all APIs in use

Let’s illustrate the point:

A system that has 100 APIs with five nines of availability must have five nines of availability from each API! If designed in a resilient way, it’s possible to have individual API dependencies fail without impacting the overall system, but it has to be carefully designed and verified to be resilient.

With this goal in mind, let’s understand the requirements for API monitoring.

What a basic API Monitoring strategy should include

These are the foundational capabilities every API monitoring strategy should include. They help teams detect issues, ensure availability, and validate performance at a basic operational level.

- Response Time: Measures the time taken for an API to respond to a request, helping identify latency issues.

- Error Rate: Tracks the percentage of failed requests to detect anomalies or bugs.

- Throughput: Monitors the number of API requests processed over a specific period to ensure scalability.

- Uptime and Availability: Ensures APIs are consistently reachable and operational.

- Logging: Collect detailed logs of API events, including timestamps, event types (e.g., errors, warnings), and messages, to aid in troubleshooting and post-incident analysis.

- Alerts: Set up alerts based on predefined thresholds or anomalies (e.g., response time exceeding 200ms or error rates surpassing 5%).

- Functional testing: verify that API endpoints return expected results

- CI/CD integration: the ability to integrate monitoring into pipelines (and tools like Jenkins or Terraform) for automated creation and update of tests also known as “monitoring as code”.

- Proactive monitoring: Use of synthetic mechanisms to continuously observe API performance to detect issues as they occur.

- Scripting: Support for scripting standards such as Playwright to enable testing specific customer & API flows.

- Historic data: A minimum of 13-month data retention to enable comparison of performance with the same period a year ago

- High-cardinality data analysis: Analyze detailed data points such as unique user IDs or session-specific information for granular insights into performance trends or anomalies.

- Chaos Engineering: Introduce errors into the system on purpose during a period of low use, or in a non-production environment in order to verify resilience.

Modern, internet-centric API monitoring requirements

Today’s systems, however, demand more than basic uptime checks or response metrics. Modern API monitoring must account for real-world complexity—geography, infrastructure, user experience, and external dependencies. The capabilities below go beyond the basics to provide deep, actionable insight.

- Monitor from where it matters – most monitoring tools have agents in cloud servers, which often have very different connectivity, resources, and bandwidth than real-world systems, and are blind to geographical differences in routing, ISP congestion, etc. It is critical to monitor from all the locations from where a system will consume an API, using an agent that has similar characteristics. It is also important to consider where your intermediate services are. For example, you may test your full application from the customer-facing API from last-mile agents. Then test intermediate microservices from the cloud provider where they're located, and test your back-end API from a backbone agent in the city & ISP where your datacenter is located or use an enterprise agent inside the datacenter.

- Visibility into the Internet Stack – While it is useful to know when an API is unresponsive or slow, it is more powerful to understand why. Modern API monitoring provides insight into anything in the Internet Stack that impacts an API including DNS resolution, SSL, routing, etc. – as well as visibility into the impact on latency and performance introduced by systems such as internal networks, SASE implementations, or gateways.

- Authentication – No modern monitoring system should have hard-coded credentials into a secure API, therefore monitoring systems must support secrets management, OAuth, tokens and modern authentication mechanisms.

- Synthetic Code Tracing - As an API is being tested, collect and understand code execution traces to identify server-side issues including application, connectivity, and database problems.

- Open Telemetry Support – modern observability implementations must support OTel as the standard mechanism to share and integrate data from multiple systems and to provide flexibility.

- Focus on User experience – An API is only a component of a broader overall system performance. A payment API is likely a component of an online purchase transaction. You want to ensure the entire transaction system performs – from the end-user perspective. Ideally, an operations team would be able to see a visual representation of every dependency in the user transaction, from end-user across the internet, network, systems, APIs – all the way into code tracing.

- Broad support for protocols – While many APIs use REST over HTTP, it may be important for your monitoring system to be able to test from both IPv4 and IPv6 agents, as well as support modern protocols like http/3 and QUIC, MQTT for IoT applications, NTP for time synchronization, or even custom or proprietary protocols that your applications use.

Traditional vs modern API monitoring at a glance

The following table summarizes the key differences between legacy API monitoring approaches and modern, Internet Performance Monitoring strategies that support resilience and user experience.

Rethink what API monitoring should do

It is somewhat surprising that the cloud is only 15 years old. As technology and system architecture has evolved, our monitoring has to evolve including API monitoring.

What is today considered “owned” or “on-premises” infrastructure is most likely in a colocation datacenter, relying on a DNS and SSL provider, connected through at least two ISPs, depending on a cloud-based authentication system, connected through a cloud-based security provider and maybe a few other APIs.

We hear all the time “My APM system shows my systems are green, but my users keep complaining”. A monitoring system that only monitors your “on premises” API is not going to be able to spot, diagnose, or provide useful root-cause information to prevent or solve incidents quickly.

To ensure API resilience, enterprises must invest in modern monitoring solutions that provide end-to-end visibility, proactive alerting, and automated remediation capabilities.

Ready to modernize your API monitoring?

- Discover how Catchpoint’s API Monitoring delivers the end-to-end visibility and resilience your users expect.