Consider a world where web applications operate with the efficiency of a well-configured machine and data flows freely between clients and servers without bottlenecks. While hard to achieve in practice, the launch of gRPC and HTTP/3 brings us one step closer.

gRPC is a modern, open-source remote procedure call (RPC) framework that can run anywhere. gRPC is not just another framework; it is an approach to building distributed systems focusing on high-performance inter-service communication. It enables client and server applications to communicate transparently, so you can build connected systems more easily.

HTTP/3 is the next major upgrade to the Hypertext Transfer Protocol (HTTP), the underlying technology that powers communication on the web. The HTTP/3 standard is designed to meet the demands of modern internet usage, characterized by high-speed connections and mobile device usage.

gRPC and HTTP/3 are reshaping digital communication, offering more speed, reliability, and flexibility than ever before. This article explores the technical improvements and benefits that these two technologies bring to your applications.

Summary of key gRPC HTTP3 improvements

Why are gRPC and HTTP/3 important?

Before gRPC, approaches such as REST and SOAP, or custom protocols built directly on TCP, were frequently used for inter-service communication. However, they handled the simultaneous bidirectional data flows required by modern applications inefficiently. For example, they were not optimized for real-time data streaming, often relied on verbose text-based message formats, and required separate client libraries for each programming environment. Without a universal protocol, developers struggled to implement cross-language services.

Google developed gRPC to create a unified, efficient, and language-agnostic framework. It was specifically designed for modern software architectures, like microservices and cloud-based environments, where efficient inter-service communication within the same application architecture is crucial.

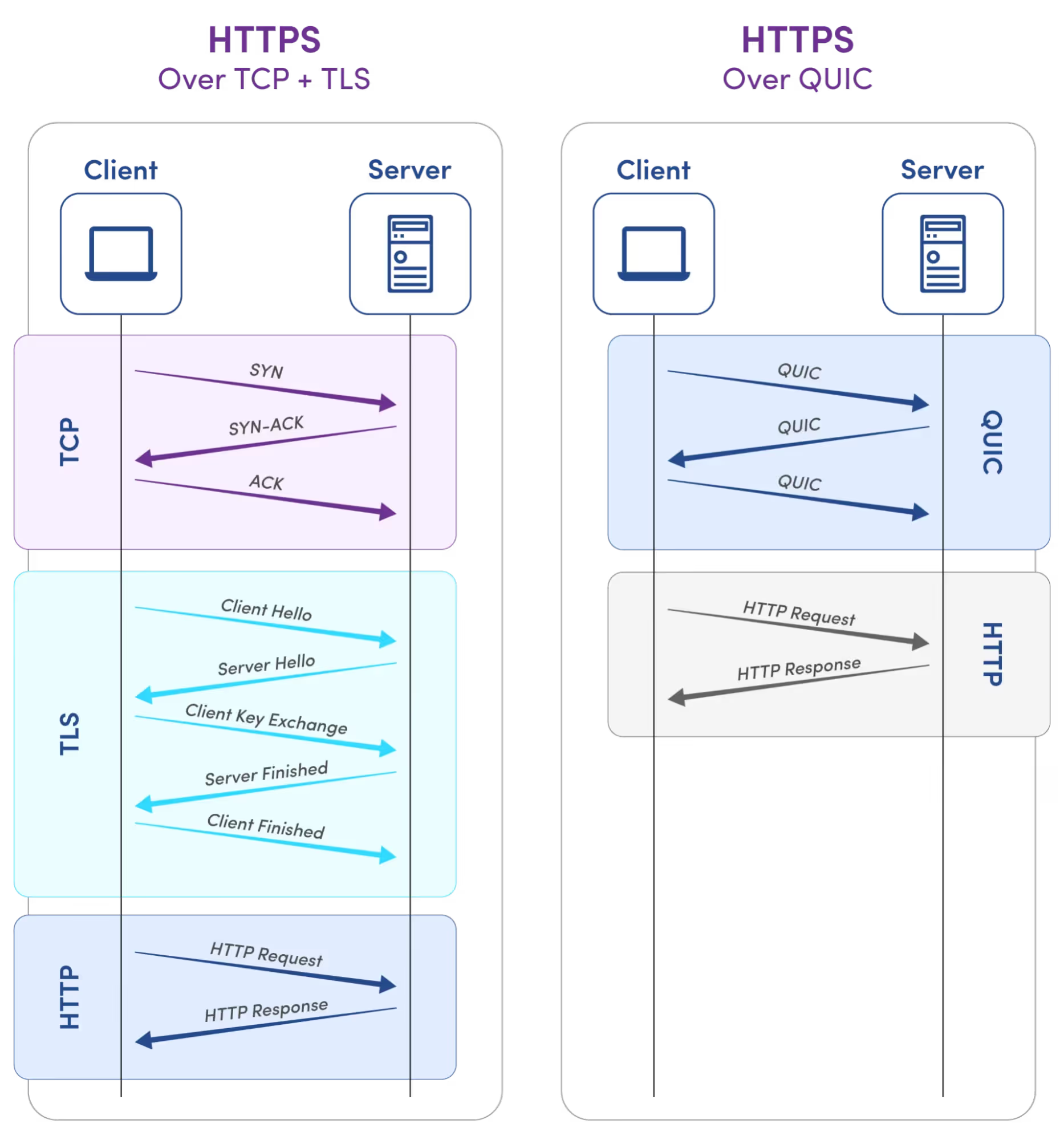

HTTP/3 is also a result of Google's innovation to improve traditional protocols. A few years before gRPC, Google developed Quick UDP Internet Connections (QUIC), a transport layer network protocol to address the latency issues associated with TCP in the context of HTTP/2 connections. HTTP/3 leverages QUIC to introduce a new communication standard for the internet.

Both gRPC and HTTP/3 aim to make data transmission on the web and between services faster and more efficient. However, because HTTP/3 was defined and implemented after gRPC, gRPC has primarily used HTTP/2 since its initial release. Adapting gRPC to HTTP/3's new and different capabilities involves significant development effort.

Although gRPC over HTTP/3 is yet to be standardized, the two technologies are the future of the internet. It is important that developers understand how they work.

Next, let’s look at some technical gRPC HTTP3 improvements and future benefits.

Serialization and compression

Serialization converts structured data into a format that different systems can easily transmit and understand. Compression reduces the size of that data to speed up transmission and lower storage requirements.

Traditional serialization formats include JSON and XML. Both are text-based formats known for being human-readable but large in size and slow to parse. The larger data sizes result in more communication overhead.

Instead, gRPC uses Protocol Buffers (protobuf) by default. It is a binary format that, while not human-readable, is significantly smaller and faster to encode and decode. Its compact encoding, which replaces field names with numeric tags and stores integers in a variable-length form, reduces data overhead before any compression is applied. gRPC can also compress messages (for example, with gzip) when payloads are large.

To understand the impact, consider a simple message defined in a .proto file:

```protobuf
message User {
  string name = 1;
  int32 id = 2;
  bool isActive = 3;
}
```

When serialized, this message is compacted into a binary format, inherently smaller than its JSON or XML counterparts, leading to faster transmission and processing times. The schema is also automatically compiled into code for various languages via protoc, the protobuf compiler, streamlining the development process.
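To make the size difference concrete, the sketch below hand-encodes the `User` message using the protobuf wire-format rules (a tag per field, varints for integers and booleans, and length-prefixed strings) and compares the result with compact JSON. The helper names and sample values are illustrative assumptions; in practice you would use the classes generated by protoc rather than building bytes by hand.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a protobuf varint (7 bits per byte)."""
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """A field tag is the field number shifted left by 3, plus the wire type."""
    return encode_varint((field_number << 3) | wire_type)


def encode_user(name: str, user_id: int, is_active: bool) -> bytes:
    """Hand-encode User{name=1, id=2, isActive=3}; assumes user_id >= 0.

    Real proto3 serializers skip fields that hold default values; this
    sketch always writes all three fields to keep the logic short.
    """
    raw_name = name.encode("utf-8")
    return (
        encode_tag(1, 2) + encode_varint(len(raw_name)) + raw_name  # string
        + encode_tag(2, 0) + encode_varint(user_id)                 # int32
        + encode_tag(3, 0) + encode_varint(int(is_active))          # bool
    )


def json_size(name: str, user_id: int, is_active: bool) -> int:
    """Size in bytes of the same data as compact JSON."""
    payload = {"name": name, "id": user_id, "isActive": is_active}
    return len(json.dumps(payload, separators=(",", ":")).encode("utf-8"))
```

With the sample values `("Alice", 42, True)`, the hand-encoded message is 11 bytes, while the compact JSON form is 40 bytes.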

Data efficiency is not only about how data is serialized but also how it is transmitted. This is where QUIC comes into play under the HTTP/3 protocol. QUIC introduces significant improvements over TCP, summarized in the table below.

When gRPC's use of protobuf is combined with HTTP/3 and its QUIC transport, the result is a highly efficient, robust communication system. Protobuf's compact binary serialization format reduces the size of the data being sent, while HTTP/3's header compression (QPACK) and QUIC's fast connection establishment further speed up data transfer.

For example, distributed gaming platforms use gRPC for client-server communication and protobuf for fast, compact message exchange. With HTTP/3, the gaming experience will be smoother, even under poor network conditions.

Multiplexing and streams

Web applications must simultaneously handle multiple requests and responses for high performance and improved user experience. This is where the concepts of multiplexing and streams become critical.

Multiplexing is a technique that allows multiple messages to be sent over a single connection without waiting for each one to complete before starting the next. HTTP/3 implements multiplexing through QUIC, which manages multiple data streams concurrently over a single connection. This approach mitigates the issue known as head-of-line blocking, which is common in TCP-based approaches such as HTTP/2. There, the loss of a single packet blocks the entire TCP connection, even if the lost data is unrelated to most of the in-progress requests.
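The effect of head-of-line blocking can be illustrated with a small simulation. The sketch below is a deliberately simplified model, not a protocol implementation: one packet is sent per time tick, exactly one packet is lost and arrives late after a retransmission, and the function name and parameters are assumptions made for illustration. It compares connection-wide ordering (TCP-like) with per-stream ordering (QUIC-like).

```python
def completion_times(packets, lost_index, retransmit_delay, per_stream_ordering):
    """Return the tick at which each stream's last packet reaches the application.

    packets: stream ids in send order, one packet per tick.
    lost_index: position of the single lost packet.
    retransmit_delay: extra ticks before the lost packet finally arrives.
    per_stream_ordering: True models QUIC, False models a single TCP byte stream.
    """
    arrival = [
        tick + (retransmit_delay if tick == lost_index else 0)
        for tick in range(len(packets))
    ]
    done = {}
    for i, stream in enumerate(packets):
        if per_stream_ordering:
            # Only earlier packets of the same stream can hold this one back.
            blockers = [arrival[j] for j in range(i + 1) if packets[j] == stream]
        else:
            # Every earlier packet on the connection can hold this one back.
            blockers = arrival[: i + 1]
        done[stream] = max(done.get(stream, 0), max(blockers))
    return done


if __name__ == "__main__":
    sent = ["A", "B", "C", "A", "B", "C"]
    print("TCP-like: ", completion_times(sent, 0, 10, per_stream_ordering=False))
    print("QUIC-like:", completion_times(sent, 0, 10, per_stream_ordering=True))
```

In this model, losing the first packet of stream A delays all three streams to tick 10 under connection-wide ordering, whereas with per-stream ordering only stream A waits and streams B and C finish at ticks 4 and 5.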

The benefits of gRPC HTTP/3 in multiplexing are summarized below.

For example, consider a microservices architecture where a single user action triggers multiple backend services. With gRPC and HTTP/3, these calls can happen in parallel over the same connection, drastically reducing the response time and improving the user experience. HTTP/3 allows stream prioritization, meaning clients can indicate which streams are more important than others. This ensures that critical requests are processed and responded to first, further optimizing the performance of web applications.
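The fan-out pattern described above can be sketched with `asyncio`. This is only an illustration of issuing calls concurrently: `call_service` is a hypothetical stand-in that sleeps instead of making a real gRPC call, and the service names and latencies are invented for the example.

```python
import asyncio
import time


async def call_service(name: str, latency_s: float) -> str:
    """Stand-in for one gRPC call; sleeps instead of doing network I/O."""
    await asyncio.sleep(latency_s)
    return f"{name}: ok"


async def handle_user_action() -> list:
    # Hypothetical backend services triggered by a single user action.
    calls = [
        call_service("inventory", 0.05),
        call_service("pricing", 0.10),
        call_service("recommendations", 0.15),
    ]
    # gather() runs the calls concurrently and keeps results in call order.
    return await asyncio.gather(*calls)


def timed_run():
    """Run the fan-out once and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = asyncio.run(handle_user_action())
    return results, time.perf_counter() - start
```

Because the calls overlap, the total time is close to the slowest call (about 0.15 seconds here) rather than the sum of all three (0.30 seconds).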

Resiliency

Resiliency ensures services remain available, reliable, and consistent despite changes in network conditions or infrastructure disruptions. Both gRPC and HTTP/3 offer features that significantly enhance the resiliency of web communication.

HTTP/3 introduces the concept of connection migration, which is essential for maintaining stable connections when a client's network environment changes. Consider a client switching networks, for example from Wi-Fi to cellular. Without HTTP/3, the switch drops the connection and requires a complete re-establishment process. With HTTP/3, the connection persists, and only minimal adjustments are needed to accommodate the new network path because QUIC identifies each connection by a unique connection ID rather than by the client's IP address and port.
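The difference comes down to how a server looks up the state of a connection. The sketch below contrasts the two lookup keys using hypothetical classes and example addresses; it is a simplified model that leaves out what real QUIC also does during migration, such as validating the new path and rotating connection IDs.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    user: str
    bytes_received: int = 0


@dataclass
class FourTupleServer:
    """TCP-style lookup: a connection is identified by addresses and ports."""
    sessions: dict = field(default_factory=dict)

    def receive(self, src_ip, src_port, dst_ip, dst_port, user, size):
        key = (src_ip, src_port, dst_ip, dst_port)
        # A new source address produces a new key, so prior state is not found.
        session = self.sessions.setdefault(key, Session(user))
        session.bytes_received += size
        return session


@dataclass
class ConnectionIdServer:
    """QUIC-style lookup: a connection is identified by its connection ID."""
    sessions: dict = field(default_factory=dict)

    def receive(self, connection_id, src_ip, user, size):
        # The source address may change; only the connection ID is the key.
        session = self.sessions.setdefault(connection_id, Session(user))
        session.bytes_received += size
        return session
```

When the same client sends 100 bytes from one address and then 50 bytes from another, the four-tuple server ends up with two unrelated sessions, while the connection-ID server keeps a single session with 150 bytes received.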

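gRPC adds resiliency at the application layer through configurable retry policies. For example, the following Python snippet enables a simple retry policy through the channel's service config.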

```python
import json

import grpc

# Service config that enables retries for every method of the named service.
json_config = json.dumps(
    {
        "methodConfig": [
            {
                "name": [{"service": "<package>.<service>"}],
                "retryPolicy": {
                    "maxAttempts": 5,  # includes the original attempt
                    "initialBackoff": "0.1s",
                    "maxBackoff": "10s",
                    "backoffMultiplier": 2,
                    "retryableStatusCodes": ["UNAVAILABLE"],
                },
            }
        ]
    }
)

address = 'localhost:50051'

# Pass the service config to the channel as a channel option.
channel = grpc.insecure_channel(address, options=[("grpc.service_config", json_config)])
```

You can use monitoring tools like Catchpoint to analyze the retry attempts, response times, and overall error rates of gRPC calls. By providing real-time data and historical analysis, Catchpoint helps you identify patterns that could indicate underlying network issues or the need for adjustments in the retry strategy.
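To get a feel for what the retry policy values mean in practice, the following sketch computes the nominal backoff before each retry. The function is a hypothetical helper, not part of the gRPC API, and it ignores the random jitter that gRPC implementations apply to the actual delay.

```python
def nominal_backoffs(max_attempts, initial_backoff_s, max_backoff_s, multiplier):
    """Return the nominal delay in seconds (before jitter) ahead of each retry."""
    retries = max_attempts - 1  # maxAttempts counts the original attempt too
    return [
        # Exponential growth, capped at the configured maximum backoff.
        min(initial_backoff_s * multiplier ** n, max_backoff_s)
        for n in range(retries)
    ]


if __name__ == "__main__":
    # Values taken from the retry policy shown above.
    print(nominal_backoffs(5, 0.1, 10, 2))
```

With the policy above (five attempts, 0.1 s initial backoff, multiplier 2), the four retries have nominal delays of 0.1, 0.2, 0.4, and 0.8 seconds, so the 10 s cap is never reached.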

Automatic code generation

One of gRPC's best features is its support for automatic code generation across multiple programming languages. Developers can define their service methods and message types once in a .proto file and automatically generate client and server code. This not only saves time but also ensures consistency and type safety across different parts of an application.

Defining a gRPC service:

```protobuf
syntax = "proto3";

package unary;

service Unary {
  rpc GetServerResponse(Message) returns (MessageResponse) {}
}

message Message {
  string message = 1;
}

message MessageResponse {
  string message = 1;
  bool received = 2;
}
```

Using the protoc compiler, this service definition generates client and server code stubs that handle the underlying communication details, letting developers focus on implementing the business logic. For detailed code, follow the official gRPC documentation.

Consider, for example, a global e-commerce platform built on a microservices architecture. The development team, distributed across multiple continents, must frequently update the product catalog service to handle new product launches and updates. Without gRPC, developers manually write client and server code for each service, a time-consuming and error-prone process.

If the team switches to gRPC over HTTP/3, developers only have to update the .proto files and regenerate the client and server code, significantly reducing development time and the potential for errors. The transition to HTTP/3 can also improve the platform's responsiveness, particularly for users in regions with less reliable internet connections. In turn, the platform can expect decreased page load times, increased user engagement, and reduced shopping cart abandonment rates.

Adaptive streaming

The demand for high-quality streaming services, from video conferencing to live broadcasting, is increasing. Users expect high-definition experiences regardless of their network conditions or geographical locations. Adaptive streaming adjusts the quality of video or audio content in real-time based on the user's current network conditions. Your application can dynamically switch between different-quality streams as bandwidth fluctuates.

gRPC and HTTP/3 support adaptive streaming. HTTP/3 reduces the time it takes to establish connections and deliver data, which is crucial for real-time interactions in applications like video conferencing. gRPC streaming combined with HTTP/3's multiplexing capabilities allows for continuous data flows without blocking. The efficiency of gRPC in data serialization and the reduced overhead of HTTP/3 allow for higher data throughput, enabling better-quality video and audio streams.

Steps for implementation:

- Split media content into small, downloadable chunks, each available in different quality levels.

- Monitor network performance and select the appropriate quality level for the next chunk, aiming for the highest quality without buffering.

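The following Python function sketches the selection logic. It assumes each quality object exposes a `bandwidth` (the throughput it requires) and a `chunk_url`, and that `network_speed` is measured in the same unit.

```python
def select_stream_chunk(available_qualities, network_speed):
    """Return the chunk URL of the best quality that fits the measured speed."""
    # Sort from the highest to the lowest required bandwidth.
    sorted_qualities = sorted(
        available_qualities, key=lambda q: q.bandwidth, reverse=True
    )
    for quality in sorted_qualities:
        if quality.bandwidth <= network_speed:
            return quality.chunk_url
    # Nothing fits: fall back to the lowest quality to limit buffering.
    return sorted_qualities[-1].chunk_url
```

Monitor metrics like bandwidth usage, buffering events, and playback interruptions to check if the adaptive streaming logic works as expected. This will help you identify and resolve issues that degrade the user experience. Monitoring performance across different regions also highlights areas where additional optimizations are needed, such as deploying more CDN nodes.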
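The network monitoring step can be sketched as a throughput estimator that smooths per-chunk measurements with an exponentially weighted moving average. This is one common approach rather than a prescribed one; the class name, the `alpha` smoothing factor, and its default value are assumptions made for illustration.

```python
class ThroughputEstimator:
    """Smooth per-chunk throughput samples into a bandwidth estimate (bits/s)."""

    def __init__(self, alpha: float = 0.5):
        self.alpha = alpha          # weight given to the newest sample
        self.estimate_bps = None    # no estimate until the first chunk arrives

    def update(self, chunk_bytes: int, download_seconds: float) -> float:
        """Record one downloaded chunk and return the updated estimate."""
        sample_bps = chunk_bytes * 8 / download_seconds
        if self.estimate_bps is None:
            self.estimate_bps = sample_bps
        else:
            # Blend the new sample with the previous estimate.
            self.estimate_bps = (
                self.alpha * sample_bps + (1 - self.alpha) * self.estimate_bps
            )
        return self.estimate_bps
```

The smoothed estimate can then serve as the network speed input when choosing the quality of the next chunk. A lower `alpha` reacts more slowly to sudden changes but avoids switching quality on every short-lived dip.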

Catchpoint's second-to-none network experience solution enables IT and Network Operations professionals to ensure users can always navigate the digital service delivery chain while being protected from critical events, whether local or distributed. It easily lets you set up proactive observability for the Internet’s most critical services, including BGP and third-party services like CDN and DNS.

Security

Integrating gRPC and HTTP/3 offers a framework for securing data in transit through encryption and authentication. HTTP/3 always runs over QUIC, which has TLS 1.3 built in, so every connection is encrypted by default. gRPC also supports mutual TLS for client and server authentication, ensuring that both parties are verified.

QUIC encrypts the payload and most of the packet header, offering an additional layer of privacy and security. By combining the transport and cryptographic handshakes, HTTP/3 reduces the time required to establish secure connections. Encrypting header fields helps protect against fingerprinting and tracking by hiding transport-layer information from observers on the network path.
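The handshake savings can be estimated with simple round-trip arithmetic. The sketch below is a simplified model that counts only network round trips and ignores processing time and packet loss; the dictionary keys and function name are illustrative assumptions.

```python
# Round trips needed before the client can send its first request.
SETUP_ROUND_TRIPS = {
    "tcp+tls1.3": 2,  # 1 RTT for the TCP handshake + 1 RTT for TLS 1.3
    "quic": 1,        # transport and TLS 1.3 handshakes are combined
    "quic-0rtt": 0,   # resumed connection: data is sent in the first flight
}


def time_to_first_response_ms(transport: str, rtt_ms: float) -> float:
    """Estimate the time until the first response arrives, in milliseconds."""
    # Setup round trips plus one round trip for the request and its response.
    return (SETUP_ROUND_TRIPS[transport] + 1) * rtt_ms


if __name__ == "__main__":
    for name in SETUP_ROUND_TRIPS:
        print(name, time_to_first_response_ms(name, rtt_ms=100))
```

On a path with a 100 ms round-trip time, this model gives 300 ms for TCP with TLS 1.3, 200 ms for a new QUIC connection, and 100 ms for a resumed QUIC connection using 0-RTT.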

Conclusion

While HTTP/2 introduced multiplexing, header compression, and server push, enhancing performance over HTTP/1.1, its reliance on TCP limits it for modern application use cases. gRPC and HTTP/3 offer solutions that improve web applications' global reach and responsiveness.

Integrating gRPC and HTTP/3 into streaming architectures doesn't just address current demands; it sets the stage for the next generation of interactive, real-time applications. From virtual reality experiences to live events, developers can build applications capable of delivering high-quality content to a global audience while adapting in real time to each user's network conditions.

Monitoring solutions are essential to ensure these technologies deliver on their promise. Tools like Catchpoint offer detailed insights into application performance across various regions, allowing teams to identify and address region-specific challenges.